Compliance & Ethics

Why Explainable AI (XAI) is Essential for Modern Industries

Sep 27, 2024

15 Min Read

AI has advanced considerably over the last several years and is expected to reshape industries such as healthcare, finance, and autonomous vehicles. To me, its future applications look almost limitless. Yet as powerful as AI is, there is an inherent challenge: many AI models are opaque systems that produce results without explaining how those results were obtained. That is where Explainable AI (XAI) comes in. In my view, XAI is about making those systems more transparent and therefore easier to interpret. In this blog post, I start by defining what interpretability means in data science and then look at how it helps build trust between AI technology and its end users.

1. What is Explainable AI (XAI)?

Explainable AI (XAI) refers to the techniques and tools used to make the decisions of AI systems more transparent and interpretable to humans. Traditional AI models, especially deep learning models, are often so complex that their inner workings are inscrutable even to experts. While these models may perform exceptionally well, their lack of transparency makes it difficult to understand why a specific prediction or decision was made.

XAI addresses this by providing human-readable explanations for AI models' outputs. Whether through visualizations, summaries, or feature importance rankings, XAI strives to answer the "why" and "how" behind AI decisions. This transparency can help instill trust, especially in high-stakes areas such as healthcare, finance, and autonomous vehicles.

2. Why Does AI Need to Be Explainable?

The need for explainability in AI is driven by several factors, including ethical concerns, legal implications, and technical challenges. Here’s why AI needs to be explainable:

A. Ethical Concerns:

When AI systems make decisions that impact human lives, transparency becomes a necessity. Consider healthcare systems that predict patient diagnoses or recommend treatments. Without understanding why certain recommendations are made, trust in AI-driven decisions may erode, especially if the system makes errors or biases emerge.

B. Regulatory Requirements:

With regulations like the European Union’s General Data Protection Regulation (GDPR), there is a legal push for explainable AI. For solely automated decisions that significantly affect individuals, such as credit scoring or hiring, GDPR gives those individuals the right to meaningful information about the logic involved (Articles 13-15 and 22), which is often described as a "right to explanation."

C. Trust and Accountability:

Businesses are increasingly relying on AI for critical decisions. In finance, for instance, AI models are used to assess loan eligibility or detect fraudulent transactions. A lack of explainability makes it difficult for organizations to justify their decisions, leaving them open to legal scrutiny or customer backlash when things go wrong.

D. Debugging and Improving Models:

Explainability also plays a technical role in debugging AI models. Data scientists need to understand why a model behaves in a certain way to improve its performance or address issues like bias. Without clear explanations, improving models can be akin to shooting in the dark.

3. Interpretability vs. Explainability: What’s the Difference?

Though the terms "interpretability" and "explainability" are often used interchangeably, they refer to different aspects of understanding AI models.

Interpretability refers to the extent to which a human can understand the cause of a decision or prediction made by the model. In simpler terms, it’s about how intuitive the relationship between the input and output is. For instance, linear regression models are considered interpretable because the relationships between input variables and the output are straightforward.

Explainability goes a step further by providing explanations that clarify why the model made a specific decision. This involves techniques that create human-friendly narratives, visualizations, or rankings of feature importance.

Interpretability is typically a property of simpler models, whereas explainability techniques can be applied even to complex, opaque models, making them understandable to users who lack deep technical knowledge.

4. The Black-Box Problem in AI

The term “black box” in AI refers to models whose internal logic and workings are hidden or too complex for humans to comprehend. Many modern AI systems, especially those relying on deep neural networks, fall into this category. Despite their success, black-box models are a cause for concern due to their opacity.

A. Risks of Black-Box Models:

Bias and Discrimination: Without transparency, it’s difficult to detect if a model is biased or if it systematically discriminates against certain groups. For example, an AI hiring system might be biased against candidates from specific demographics without anyone realizing it.

Trust Deficit: Black-box models make it hard for stakeholders—whether customers, regulators, or even developers—to trust AI decisions. When a model makes an unexpected or incorrect decision, stakeholders are left in the dark as to why.

Legal Compliance: In highly regulated industries like finance or healthcare, lack of transparency can pose legal challenges. A company using a black-box model may struggle to explain to regulators why a decision was made, potentially resulting in non-compliance with laws such as GDPR or HIPAA.

5. Types of Explainable AI Methods

There are various methods of making AI models explainable, each with its strengths and weaknesses. These methods can be broadly categorized into two types: intrinsic and post-hoc explanations.

A. Intrinsic Explanations:

These methods involve building interpretable models from the ground up. Examples include:

Linear Models: Models like linear regression or logistic regression that inherently provide transparency through coefficients.

Decision Trees: A tree-like model where each decision node explains how the decision is made, making it intuitive and easy to follow.

Rule-Based Models: These models use if-then rules, allowing users to see the logic behind decisions.
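To make the idea of transparency through coefficients concrete, here is a minimal sketch of a hand-written linear scoring model. The feature names, weights, and applicant values are invented for illustration and do not come from any real credit model. Because the score is just a weighted sum, each feature's contribution can be read off directly.

```python
# Hypothetical weights for a toy credit-scoring model (illustrative only).
WEIGHTS = {"income_k": 0.5, "debt_ratio": -40.0, "years_employed": 2.0}
BIAS = 10.0


def score(applicant):
    """Linear score: bias plus the weighted sum of the features."""
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def explain(applicant):
    """Return each feature's contribution (weight * value), largest magnitude first."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


applicant = {"income_k": 60, "debt_ratio": 0.5, "years_employed": 4}
total = score(applicant)
breakdown = explain(applicant)

print(f"score = {total}")
for name, value in breakdown:
    print(f"{name:>15}: {value:+.1f}")
```

The bias plus the listed contributions adds up to the score exactly, which is what makes this kind of model explainable without any extra tooling.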

B. Post-Hoc Explanations:

For complex models like neural networks, explainability is often introduced after the model has been trained. These post-hoc methods aim to explain decisions without altering the original model’s structure.

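LIME (Local Interpretable Model-agnostic Explanations): This method fits a simple, interpretable model (such as a sparse linear model) to the black box's behavior in the neighborhood of a single input, and uses that local approximation to explain the individual prediction. It works with any machine learning model.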

SHAP (SHapley Additive exPlanations): SHAP assigns each feature an importance value for a particular prediction, offering a clear breakdown of how input variables contribute to an output.

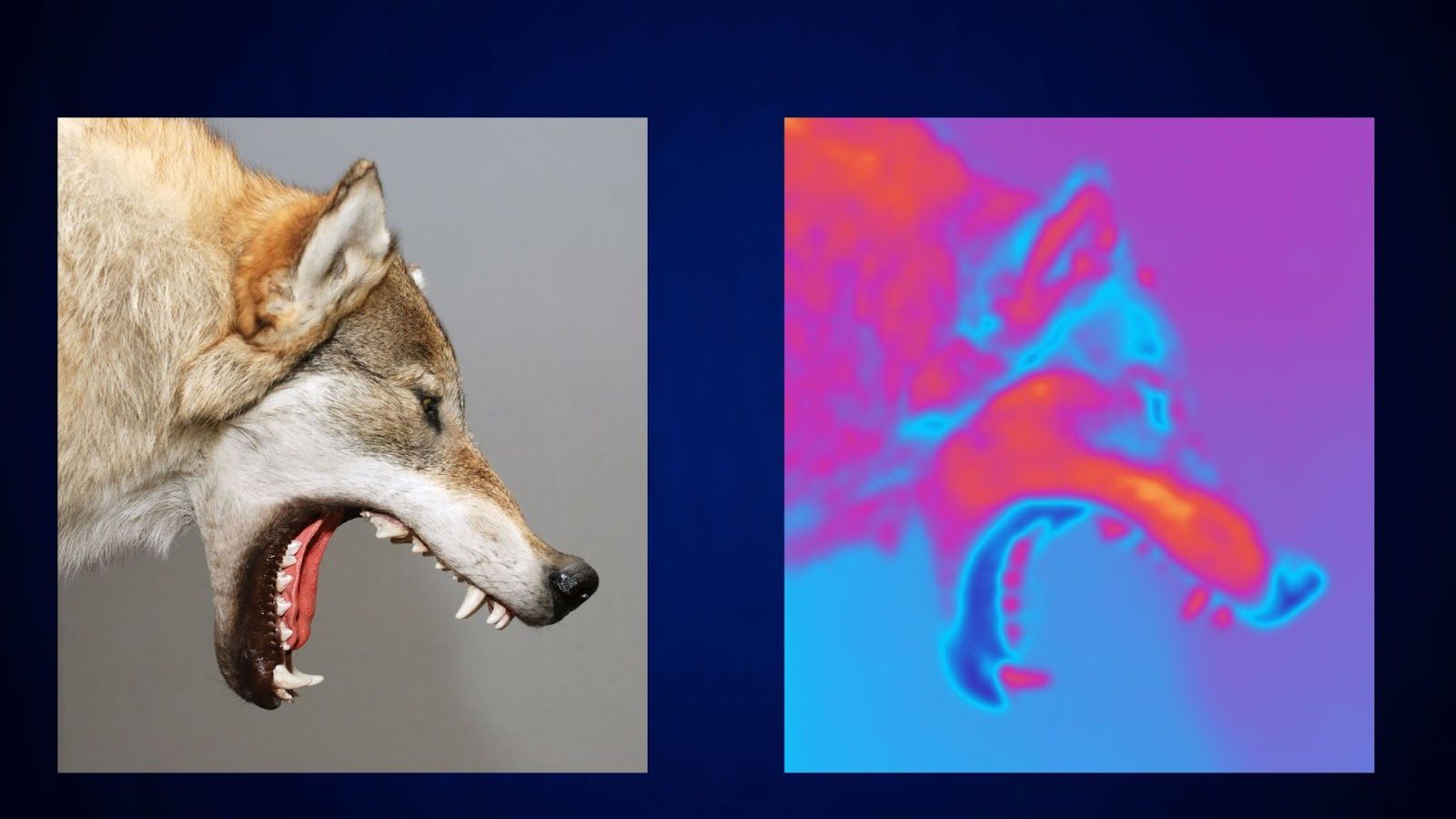

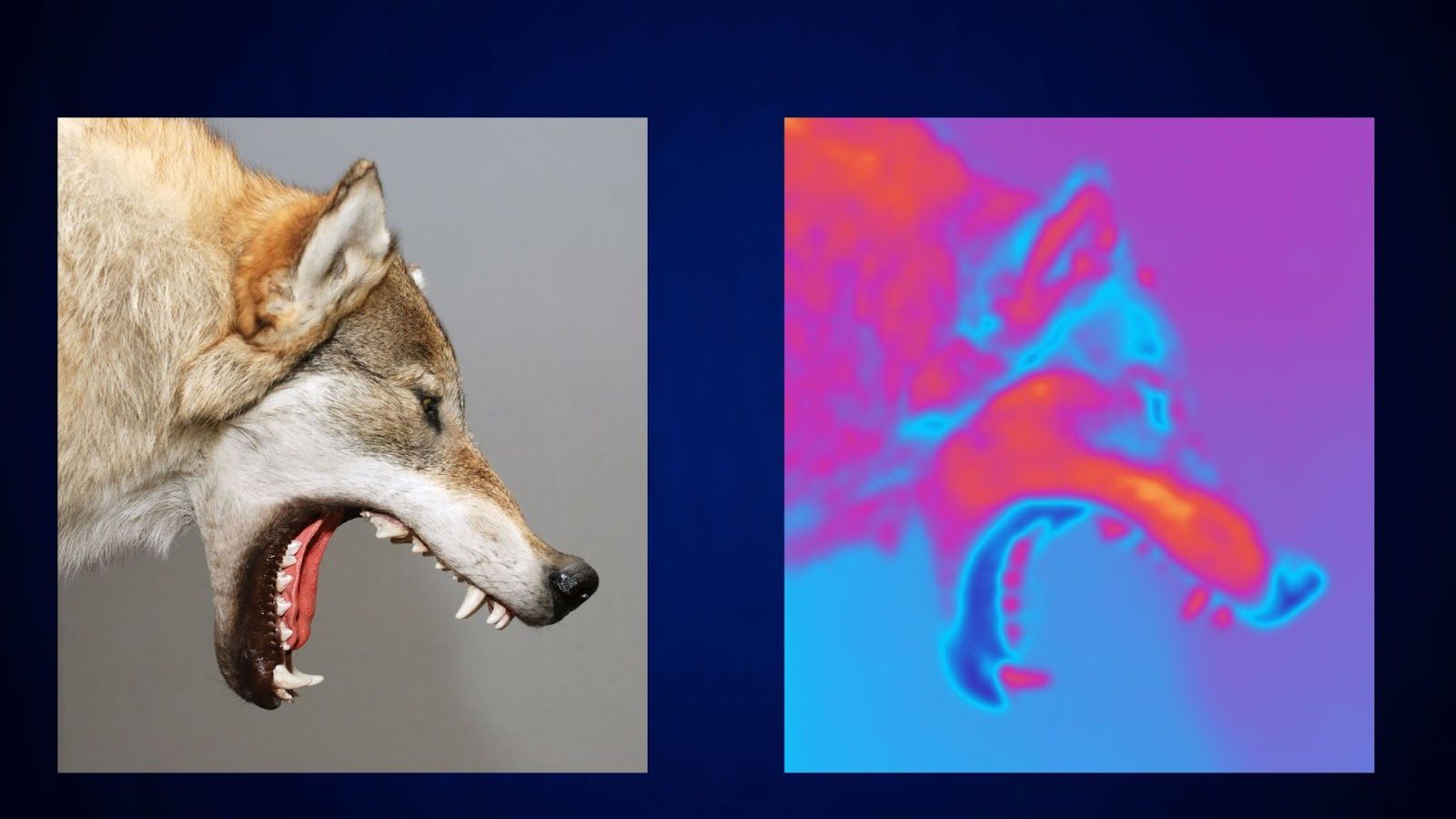

Saliency Maps: Used primarily in deep learning for image recognition, these maps highlight areas of an image that the model found most relevant to its prediction.
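The idea behind SHAP can be sketched with a brute-force computation of Shapley values on a tiny model. This is not how the SHAP library works in practice: it relies on approximations and a background dataset, whereas the sketch below uses a single all-zero baseline and enumerates every feature ordering, which only scales to a handful of features. The `model` function and the input values are hypothetical.

```python
from itertools import permutations


def model(x):
    """Toy black box with an interaction between features 0 and 1 (hypothetical)."""
    return 3.0 * x[0] + 2.0 * x[1] + x[0] * x[1] - x[2]


def shapley_values(f, x, baseline):
    """Exact Shapley values: average each feature's marginal contribution
    over every order in which features can be switched from baseline to x."""
    n = len(x)
    phi = [0.0] * n
    orders = list(permutations(range(n)))
    for order in orders:
        current = list(baseline)
        previous = f(current)
        for i in order:
            current[i] = x[i]          # switch feature i to its actual value
            value = f(current)
            phi[i] += value - previous  # marginal contribution in this order
            previous = value
    return [p / len(orders) for p in phi]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print(phi)
```

The attributions sum to the difference between the prediction for `x` and the prediction for the baseline, and the interaction term is split evenly between the two features that share it.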

6. Interpretable Machine Learning Techniques

Several interpretable machine learning techniques are frequently used in data science to ensure that models can be easily understood by both experts and non-experts.

A. Feature Importance:

Feature importance methods rank the input features according to their contribution to the model’s output. Tools like SHAP or LIME can provide insights into which features are driving a model's predictions. This is useful when trying to understand why a model is favoring certain data points over others.
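One simple way to rank features, separate from SHAP and LIME, is permutation importance: shuffle one feature column at a time and measure how much the model's error grows. The sketch below is a minimal version under stated assumptions: the `model` function is a made-up stand-in for a black box, and the data is synthetic.

```python
import random


def model(row):
    """Toy black box (hypothetical): depends strongly on x0, weakly on x1, not on x2."""
    return 5.0 * row[0] + 0.5 * row[1]


def mean_squared_error(f, rows, targets):
    return sum((f(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(f, rows, targets, n_features, rng):
    """Increase in error when one feature column is shuffled, per feature."""
    base_error = mean_squared_error(f, rows, targets)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(mean_squared_error(f, shuffled, targets) - base_error)
    return importances


rng = random.Random(0)
rows = [[rng.random(), rng.random(), rng.random()] for _ in range(200)]
targets = [model(r) for r in rows]  # noise-free targets, so the base error is 0

importances = permutation_importance(model, rows, targets, 3, rng)
ranking = sorted(range(3), key=lambda j: importances[j], reverse=True)
print(importances, ranking)
```

Shuffling the unused feature leaves the error unchanged, so its importance is zero, while the strongly weighted feature ranks first.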

B. Partial Dependence Plots (PDP):

PDPs visualize the relationship between an input feature and the predicted outcome while marginalizing over other features. This helps explain how changes in one variable affect the model's predictions.
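The marginalization step can be sketched in a few lines: for each candidate value of the feature of interest, overwrite that feature in every row and average the model's predictions. The `model` function and the four data rows below are hypothetical, chosen so the result is easy to check by hand.

```python
def model(row):
    """Toy black box (hypothetical): additive in x0 and x1."""
    return 2.0 * row[0] + row[1]


def partial_dependence(f, rows, feature, grid):
    """For each grid value, force `feature` to that value in every row
    and average the predictions (marginalizing over the other features)."""
    curve = []
    for value in grid:
        modified = [r[:feature] + [value] + r[feature + 1:] for r in rows]
        curve.append(sum(f(r) for r in modified) / len(modified))
    return curve


rows = [[0.0, 1.0], [1.0, 3.0], [2.0, 5.0], [3.0, 7.0]]
grid = [0.0, 1.0, 2.0]
curve = partial_dependence(model, rows, feature=0, grid=grid)
print(list(zip(grid, curve)))  # [(0.0, 4.0), (1.0, 6.0), (2.0, 8.0)]
```

The curve rises by 2 for each unit of x0, matching the model's coefficient. Note that the procedure pairs every grid value with every row, even combinations that never occur in the data, which is a known caveat of PDPs when features are correlated.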

C. Surrogate Models:

Surrogate models are simpler models that approximate the behavior of a more complex black-box model. For example, a decision tree might be trained to mimic the behavior of a deep neural network, making it easier to explain the network’s predictions.
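As a rough sketch of the surrogate idea, the code below fits the simplest possible decision tree, a single-split "stump", to the outputs of a stand-in black box and reports how often the two agree (the surrogate's fidelity). The `black_box` function and the synthetic data are assumptions made for illustration; a real surrogate would usually be a deeper tree trained with a library.

```python
import random


def black_box(row):
    """Stand-in for an opaque classifier (hypothetical): a nonlinear rule."""
    return 1 if row[0] ** 2 + 0.1 * row[1] > 0.25 else 0


def fit_stump(rows, labels):
    """Find the single 'feature > threshold' rule that best matches the labels."""
    best = None
    for j in range(len(rows[0])):
        for threshold in sorted({r[j] for r in rows}):
            predictions = [1 if r[j] > threshold else 0 for r in rows]
            agreement = sum(p == y for p, y in zip(predictions, labels)) / len(rows)
            if best is None or agreement > best[2]:
                best = (j, threshold, agreement)
    return best


rng = random.Random(0)
rows = [[rng.random(), rng.random()] for _ in range(300)]
# The surrogate is trained on the black box's outputs, not on ground-truth labels.
labels = [black_box(r) for r in rows]

feature, threshold, fidelity = fit_stump(rows, labels)
print(f"if x{feature} > {threshold:.2f} then 1 else 0  (fidelity {fidelity:.0%})")
```

Fidelity matters as much as readability here: a surrogate that disagrees with the black box too often explains a different model than the one in production.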

7. Model-Agnostic vs. Model-Specific Explanations

When discussing explainability, it’s essential to distinguish between model-agnostic and model-specific approaches.

Model-Agnostic:

These techniques can be applied to any machine learning model, regardless of its underlying structure. LIME and the kernel-based variant of SHAP (KernelSHAP) fall into this category, although SHAP also offers faster model-specific variants such as TreeSHAP for tree ensembles. Their versatility makes them suitable for explaining a wide range of models, from decision trees to deep neural networks.

Model-Specific:

These methods are tailored for specific types of models. For example, decision trees inherently provide explanations through their structure. Another example is convolutional neural networks (CNNs), which can use visualization techniques like saliency maps to explain image classifications.

8. Case Studies in XAI: Real-World Applications

A. Healthcare:

AI models in healthcare have the potential to revolutionize diagnostics, but they must be explainable to gain the trust of doctors and patients. For instance, IBM's Watson for Oncology was designed to assist oncologists in cancer treatment decisions using machine learning. For such a system, explainability is crucial for ensuring that doctors understand the reasoning behind its recommendations.

B. Finance:

In the finance industry, AI models are used to assess creditworthiness, detect fraud, and make trading decisions. Companies like FICO use explainable AI to ensure that their credit scoring models are transparent and non-discriminatory, complying with regulatory requirements.

C. Autonomous Vehicles:

Explainability is also critical in the development of autonomous vehicles. These vehicles rely on complex AI systems to navigate roads and make real-time decisions. Understanding why a car chose a specific action, like braking or swerving, is essential in ensuring safety and accountability in case of accidents.

9. The Future of XAI in Data Science

A. Democratization of AI

As XAI methods become more advanced, they are likely to help in the democratization of AI by making machine learning models more accessible to a broader audience. This includes not only technical experts but also non-technical users, decision-makers, and even the general public. By enabling users to understand how and why AI systems reach certain conclusions, XAI can foster greater acceptance of AI solutions across industries.

B. Enhanced Collaboration

Explainable AI also opens the door for better collaboration between humans and AI. In fields like healthcare and law enforcement, where human expertise is indispensable, XAI can act as a bridge, allowing experts to work alongside AI systems with greater confidence. Rather than replacing humans, AI can enhance decision-making processes, providing insights that experts can easily interpret and validate.

C. Regulatory Compliance and Ethical AI

Governments and regulatory bodies worldwide are grappling with the ethical implications of AI. In sectors such as finance, healthcare, and criminal justice, XAI will be essential for meeting regulatory requirements that demand transparency and fairness. Companies that leverage explainable models will not only be more compliant but also more competitive, as trust in AI systems becomes a key differentiator.

D. Improved AI Development and Debugging

XAI provides invaluable tools for data scientists and AI engineers. By offering insights into model behavior, it makes it easier to debug, fine-tune, and improve models. AI development will increasingly rely on explainability as a core component of model evaluation, ensuring that models are not only accurate but also interpretable.

10. Challenges and Limitations of XAI

While XAI offers significant advantages, it is not without its challenges. Achieving full explainability, especially in highly complex models like deep neural networks, remains an ongoing area of research. Here are some key challenges that need to be addressed:

A. Trade-off Between Accuracy and Interpretability

One of the primary challenges in XAI is balancing accuracy with interpretability. Often, the most accurate models—such as deep learning or ensemble methods—are also the most difficult to interpret. Simplifying a model to make it more explainable may reduce its accuracy, leading to a trade-off that must be carefully managed.

B. Scalability

As AI models grow in size and complexity, so too does the challenge of explaining them. Techniques like SHAP and LIME, while effective, can become computationally expensive when applied to large-scale models or datasets. Scalability is a critical issue that needs to be solved for XAI methods to be viable in big data environments.

C. Subjectivity in Explanations

Explanations provided by XAI methods are not always definitive or objective. For instance, different techniques may produce slightly different explanations for the same model prediction. This subjectivity can be confusing, especially when different stakeholders are involved, each requiring explanations tailored to their needs and expertise.

D. Evolving Models

AI models are often updated or retrained over time as new data becomes available. This creates a challenge for XAI, as explanations that were once valid may no longer apply after the model evolves. Continuous monitoring and updating of explanations are necessary to ensure that they remain relevant.

Conclusion: The Path Forward for XAI in Data Science

Explainable AI is a critical development in the field of artificial intelligence, addressing the growing need for transparency, trust, and accountability in machine learning models. As AI becomes more pervasive across industries, XAI will serve as a key enabler for the responsible deployment of AI technologies.

For data scientists, embracing XAI offers numerous benefits, from building more ethical and compliant AI systems to improving model development and fostering better collaboration between humans and machines. However, challenges such as scalability, subjectivity, and the trade-off between accuracy and interpretability must be carefully navigated.

In the future, we can expect the field of XAI to continue evolving, driven by advancements in both research and regulatory demands. With the right balance of innovation and oversight, XAI has the potential to unlock the full power of AI—making it not just smarter, but also more human.
